Labrador Labs (CEOs Jinseok Kim and Heejo Lee) announced plans to strengthen AI supply chain security in order to proactively address cybersecurity threats and licensing issues arising from open-source AI models.

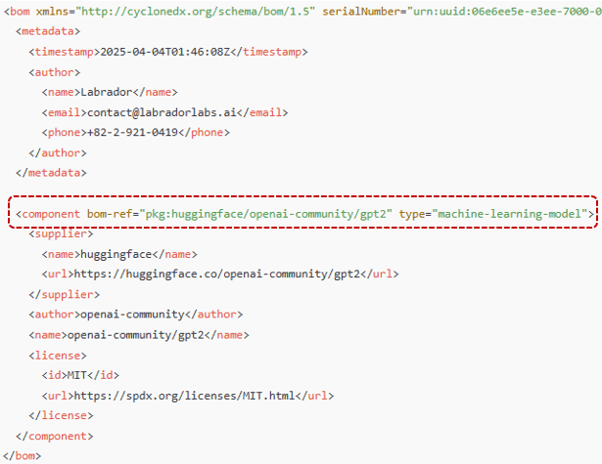

The company explained that although the AI Bill of Materials (AI BOM) standard has not yet been fully established, it has preemptively extended the existing SBOM (Software Bill of Materials) framework with features that reflect the characteristics of AI models. Labrador Labs also designed a flexible structure and data schema that can adapt immediately once AI BOM standards are finalized. The system adds functions that automatically detect AI models and check their code locations, sources, and reliability.
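As a rough illustration of what extending an SBOM entry with AI model attributes could look like, the following minimal Python sketch adds model-specific fields to a generic component record. This is not Labrador Labs' actual schema: every class and field name here (`SbomComponent`, `AiModelComponent`, `model_source`, `code_locations`, `reliability`, `extra`) is a hypothetical chosen for illustration, and the `extra` mapping is only one assumed way to leave room for fields that a future AI BOM standard might require.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import Dict, List, Optional


@dataclass
class SbomComponent:
    """A simplified, generic SBOM component entry (hypothetical fields)."""
    name: str
    version: str
    license: Optional[str] = None


@dataclass
class AiModelComponent(SbomComponent):
    """An SBOM component extended with AI model attributes (all hypothetical)."""
    model_source: Optional[str] = None                        # where the model was obtained
    code_locations: List[str] = field(default_factory=list)   # files that load the model
    reliability: str = "unknown"                              # e.g. "trusted", "suspicious"
    extra: Dict[str, str] = field(default_factory=dict)       # room for future AI BOM fields


def to_sbom_json(components: List[SbomComponent]) -> str:
    """Serialize ordinary and AI model components into one JSON document."""
    return json.dumps({"components": [asdict(c) for c in components]}, indent=2)
```

Because the AI model record inherits from the ordinary component record, existing SBOM entries stay unchanged while model entries carry the additional attributes.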

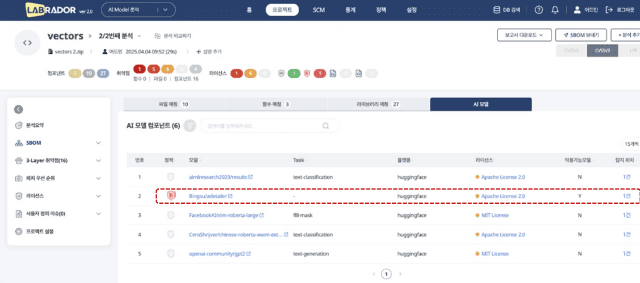

The “Labrador SCA” platform classified the YOLO-based object detection model “Bingsu/Adetailer,” registered on Hugging Face, as a potentially malicious model based on its supply chain attack history and security warnings. This model alone was downloaded more than 21.5 million times last month, so its wide use magnifies the potential security risk.

As generative AI adoption accelerates, companies and organizations are actively incorporating open-source AI models. As of 2025, more than 1.5 million AI models are registered on the Hugging Face platform, with over 200,000 daily downloads. Labrador Labs’ internal analysis found more than 1,700 exploitable AI models in open-source repositories.

[Labrador AI Model Analysis Screen]

[Image showing the results of AI model detection added to the existing SBOM standard.]

These exploitable models may contain backdoors or malicious scripts. Other risks include unauthorized license changes and tampered training data. If a company downloads an AI model with a backdoor, attackers could gain internal network access and steal sensitive information, such as customer data or business secrets. Exploitable models can also corrupt or falsify training data, leading to biased outputs or errors in critical decisions.

Numerous cases of license violations have been identified among open-source AI models. If datasets are used without consent or copyright is infringed, companies could face lawsuits and damages claims. Security incidents have also been reported: in one case, a backdoor was discovered in an AI model uploaded to GitHub, and in another, a malicious installation script was distributed along with the text-to-image generation model Stable Diffusion.

Moreover, large language models (LLMs) have reportedly leaked personal data used during training. Labrador Labs emphasized that companies must now assess the reliability and safety of AI models before deployment to mitigate supply chain risks.

The “Labrador SCA Platform” automatically identifies the usage history, source, license status, and presence of malicious code in pre-trained AI models, which helps manage AI-related security threats proactively at the SBOM level. The platform also reduces supply chain risk by detecting untrustworthy AI models or code before integration. This approach helps avoid legal exposure from AI model license violations while improving transparency during security certifications and compliance checks. The automatically generated SBOM data can also be used for internal audits and regulatory reporting.
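The idea of checking a model's source, license, and malicious-code status before integration can be sketched as a simple policy gate. The Python example below is only an illustration under stated assumptions and does not represent how the Labrador SCA Platform works: the `ModelRecord` type, the `review_model` function, and the allow-list values in `ALLOWED_LICENSES` and `TRUSTED_SOURCES` are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical policy values, chosen only for illustration.
ALLOWED_LICENSES = {"apache-2.0", "mit"}
TRUSTED_SOURCES = {"internal-registry"}


@dataclass
class ModelRecord:
    """What a scanner might report about one AI model (hypothetical fields)."""
    name: str
    source: str
    license: Optional[str]
    flagged_malicious: bool


def review_model(record: ModelRecord) -> List[str]:
    """Return the policy findings for a model; an empty list means it passes."""
    findings = []
    if record.flagged_malicious:
        findings.append("flagged as potentially malicious")
    if record.source not in TRUSTED_SOURCES:
        findings.append(f"untrusted source: {record.source}")
    if record.license is None:
        findings.append("license missing")
    elif record.license.lower() not in ALLOWED_LICENSES:
        findings.append(f"license not allowed: {record.license}")
    return findings
```

A build pipeline could refuse to integrate any model for which `review_model` returns a non-empty list and keep the findings for later audit reporting.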

Labrador Labs reiterated that although AI BOM standards are still evolving, its system already supports expanded functions reflecting AI model traits and can quickly adapt once standards are fully defined.

[Translation from original article (Korean)]

https://zdnet.co.kr/view/?no=20250407170148